Introduction

Understanding BM-25: A Powerful Algorithm for Information Retrieval

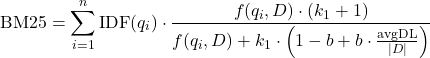

Bm25 is an enhancement of the TF-IDF model that incorporates term frequency saturation and document length normalization to improve retrieval performance.

When it comes to search engines and information retrieval, a vital piece of the puzzle is ranking the relevance of documents to a given query. One of the most widely used algorithms to achieve this is the BM25, Best Matching 25. BM25 is a probabilistic retrieval function that evaluates the relevance of a document to a search query, balancing simplicity and effectiveness, making it a popular choice in modern search engines and applications.

BM25 is essentially a scoring function that calculates a numerical score to estimate the relevance of a document for a given query. This score is based on the occurrences and importance of query terms within the document. The higher the score, the more relevant the document is considered to be.

Where:

( q_i ) is a term in the query.

( f(q_i, D) ) is the frequency of term ( q_i ) in document ( D ).

( |D| ) is the length of document ( D ) (number of words).

( {avgDL} ) is the average document length in the corpus.

( k_1 ) and ( b ) are free parameters, where:

( k_1 ) controls the term frequency saturation, with typical values around 1.2 to 2.

( b ) controls the degree of document length normalization, typically set to 0.75.

( {IDF}(q_i) ) is the inverse document frequency of term ( q_i ).

The IDF function adjusts term weight based on its distribution across documents, giving more importance to rarer terms.

Key Concepts in BM25

BM25 improves upon traditional retrieval models by considering several essential aspects:

- Term Frequency (TF): The frequency of a term in the document directly impacts relevance. BM25, however, includes a saturation factor, meaning that additional occurrences of a term add less weight past a certain point, avoiding overemphasis on highly frequent terms.

- Inverse Document Frequency (IDF): BM25 uses IDF to balance term frequency by considering how rare or common a term is across documents in the corpus. This way, unique terms in a document are weighted more heavily than common terms.

- Document Length Normalization: BM25 incorporates a normalization factor to control the impact of document length on term frequency, which ensures that long documents are not unfairly penalized or favored.

- Adjustable Parameters (k1 and b): BM25 allows flexibility with its two main parameters:

- k1 adjusts term frequency scaling, with higher values meaning more emphasis on term frequency.

- b is a document length normalization parameter that allows BM25 to adapt to different types of datasets.

Advantages of BM25

- Term Saturation: Prevents excessively high scores for very frequent terms by introducing saturation.

- Length Normalization: Adjusts scores based on document length, reducing bias towards longer documents.

- Performance: Generally outperforms TF-IDF in retrieval tasks due to its more sophisticated modeling.

Practical Applications of BM25

BM25 is widely applied in fields where accurate and relevant retrieval is essential, including:

- Search Engines: BM25 is fundamental in search engine technology, powering both traditional search engines and modern engines like Elasticsearch and Solr, which are used in e-commerce, content management, and enterprise applications.

- Document Retrieval Systems: Many document-heavy systems, such as research databases, use BM25 to efficiently rank academic papers, reports, and other documents according to user queries.

- Social Media and News Retrieval: BM25 can help prioritize content that matches user interests by surfacing posts, articles, or news stories that best match user searches.

Code for implementing BM-25

import math

# BM25 parameters

k1 = 1.5

b = 0.75

# Example document collection and query

corpus = [

"the brown fox jumped over the brown dog",

"the lazy dog sat in the sun",

"the quick brown fox leaped over the lazy dog"

]

query = ["brown", "fox"]

# Pre-compute average document length

avg_doc_length = sum(len(doc.split()) for doc in corpus) / len(corpus)

# Function to calculate term frequency in a document

def term_frequency(term, document):

return document.split().count(term)

# Function to calculate document frequency for a term

def document_frequency(term, corpus):

return sum(1 for doc in corpus if term in doc)

# BM25 function

def bm25_score(query, document, corpus):

score = 0

doc_length = len(document.split())

for term in query:

tf = term_frequency(term, document)

df = document_frequency(term, corpus)

idf = math.log((len(corpus) - df + 0.5) / (df + 0.5) + 1)

score += idf * ((tf * (k1 + 1)) / (tf + k1 * (1 - b + b * (doc_length / avg_doc_length))))

return score

# Calculate BM25 scores for each document

for doc in corpus:

print(f"Document: {doc}")

print(f"BM25 Score: {bm25_score(query, doc, corpus)}")

Results for Query: [“brown”, “fox”]

Document: the brown fox jumped over the brown dog

BM25 Score: 1.1414373853110722

Document: the lazy dog sat in the sun

BM25 Score: 0.0

Document: the quick brown fox leaped over the lazy dog

BM25 Score: 0.889947700346955Instead of manually implementing BM25 calculations, we can use python package

from rank_bm25 import BM25Okapi

import pandas as pd

# Sample corpus

corpus = [

"the brown fox jumped over the brown dog",

"the lazy dog sat in the sun",

"the quick brown fox leaped over the lazy dog"

]

# Tokenize the corpus

tokenized_corpus = [doc.split() for doc in corpus]

# Initialize BM25

bm25 = BM25Okapi(tokenized_corpus)

# Query example

query = "dog in sun"

tokenized_query = query.split()

# Get BM25 scores for the query

scores = bm25.get_scores(tokenized_query)

print("BM25 Scores:\n", scores)But with this we get results like

BM25 Scores:

[-0.14521689 0. -0.11322166]If we use bm25 = BM25L(tokenized_corpus), we get scores

BM25 Scores:

[2.05626588 0. 1.14044998]We can read more about this from this paper: https://www.cs.otago.ac.nz/homepages/andrew/papers/2014-2.pdf

In the BM25 model, negative scores are unusual because BM25 is usually designed to produce non-negative relevance scores. However, there can be a few reasons why we are observing negative scores in this particular case:

Libraries like rank_bm25 are optimized for larger corpora and may use precision adjustments that lead to small differences in final scores. For a small corpus, as in your example, these adjustments can result in noticeable differences in scores and may even lead to negative values if the library uses scaling that expects a larger corpus size.

https://pypi.org/project/rank-bm25 This library has many implementations of BM25.

There are more advanced techniques also like BM42 – https://qdrant.tech/articles/bm42/

Conclusion

BM25 remains one of the most efficient and effective ranking functions for information retrieval, especially in text-based search engines and large datasets. Its balance of term frequency, document length normalization, and adjustable parameters makes it flexible and adaptable across domains. By combining the fundamentals of probabilistic retrieval with an intuitive approach to term weighting, BM25 continues to be the go-to choice for modern search systems and beyond.