Introduction

Large Language Models (LLMs) are the powerhouses behind cutting-edge AI applications like chatbots and text generation tools. These complex models have traditionally relied on high-performance GPUs to handle the massive amounts of computation involved. But what if that wasn’t necessary? Recent breakthroughs, like the BitNet b1.58 model, hint at a future where LLMs depend far less on expensive, power-hungry GPUs.

The Problem with Floating-Point Precision

Most LLMs today store their weights and activations as floating-point numbers, typically in 32-bit (FP32) or 16-bit (FP16 or BF16) formats. These formats are expressive, but every layer boils down to huge numbers of floating-point multiply-accumulate operations, and the weights alone take up a lot of memory. That is where those powerful GPUs come in. But what if we could change the rules of the game?
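For a rough sense of scale, the arithmetic below compares the weight storage of a hypothetical 7-billion-parameter model in FP32 and FP16 with the theoretical minimum for a ternary encoding, log2(3) ≈ 1.58 bits per weight. It is a back-of-the-envelope sketch: it counts weights only (no activations or KV cache), and real packed ternary formats spend somewhat more than this minimum.

```python
import math


def weight_storage_gb(num_params: int, bits_per_weight: float) -> float:
    """Storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical 7-billion-parameter model; only the weights are counted.
NUM_PARAMS = 7_000_000_000

formats = {
    "FP32": 32.0,
    "FP16": 16.0,
    "ternary (log2 3)": math.log2(3),  # information-theoretic minimum, ~1.585 bits
}

for name, bits in formats.items():
    size = weight_storage_gb(NUM_PARAMS, bits)
    print(f"{name:>16}: {bits:6.3f} bits/weight -> {size:6.2f} GB")
```

For 7 billion weights this works out to 28 GB in FP32, 14 GB in FP16, and just under 1.4 GB at the ternary minimum.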

Introducing BitNet b1.58: Embracing Ternary Efficiency

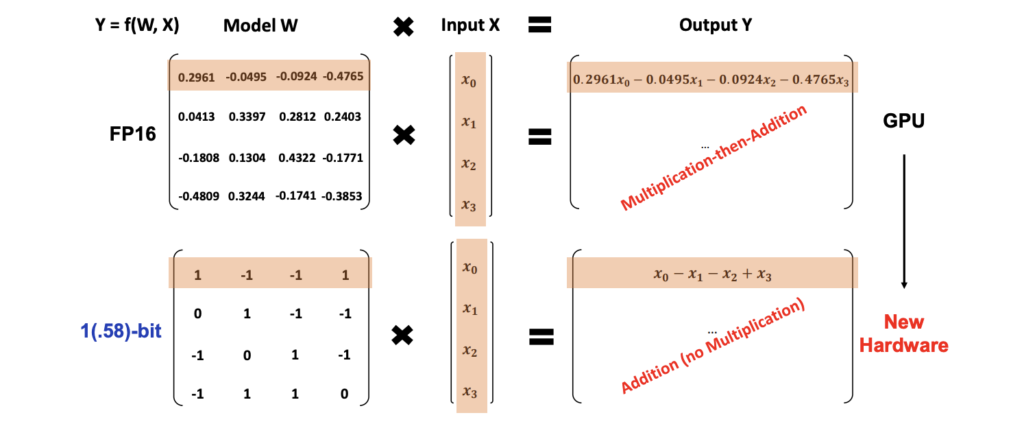

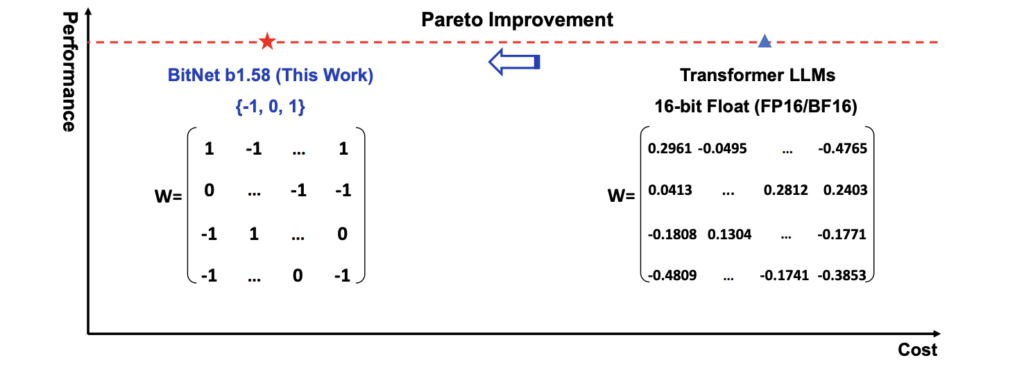

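BitNet b1.58 is a groundbreaking LLM that challenges the status quo. Instead of storing its weights as floating-point numbers, it restricts every weight to one of three ternary values: -1, 0, or +1 (activations are kept at 8-bit precision). The "1.58" in the name comes from log2(3) ≈ 1.58, the number of bits of information needed to encode one of three values. This simple shift has profound implications: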

- Reduced Computational Complexity: Because every weight is -1, 0, or +1, matrix multiplication no longer needs actual multiplications: each input value is simply added, subtracted, or skipped. Additions are far cheaper than floating-point multiplications, which significantly reduces the computational burden.

- Hardware Innovation: Removing most multiplications opens the door to new types of hardware designed specifically for this kind of addition-based computation.

- Efficiency and Accessibility: BitNet b1.58 promises more efficient, accessible models that need less memory, consume less power, and could potentially run on a wider range of devices, including edge devices and smartphones.
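To make the ternary idea concrete, here is a minimal pure-Python sketch. It assumes the "absmean" quantization described in the BitNet b1.58 paper (divide a weight matrix by its mean absolute value, then round and clip to -1, 0, +1). The function names are hypothetical, the paper's 8-bit activation quantization is left out, and a real implementation would use packed integer kernels rather than Python loops. The point is only to show that, once weights are ternary, a matrix-vector product reduces to additions, subtractions, and skipped terms.

```python
import random
from statistics import mean


def absmean_ternary(weights, eps=1e-5):
    """Quantize a weight matrix (list of rows) to {-1, 0, +1} plus one scale.

    Sketch of absmean quantization: divide by the mean absolute weight,
    round to the nearest integer, then clip to the range [-1, 1].
    """
    gamma = mean(abs(w) for row in weights for w in row)
    ternary = [
        [max(-1, min(1, round(w / (gamma + eps)))) for w in row]
        for row in weights
    ]
    return ternary, gamma


def ternary_matvec(ternary, x):
    """Compute ternary @ x using only additions and subtractions."""
    out = []
    for row in ternary:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi  # +1 weight: add the input
            elif w == -1:
                acc -= xi  # -1 weight: subtract the input
            # 0 weight: skip the input entirely
        out.append(acc)
    return out


# Usage: random full-precision weights and inputs (illustrative data only).
random.seed(0)
W = [[random.gauss(0, 1) for _ in range(8)] for _ in range(4)]
x = [random.gauss(0, 1) for _ in range(8)]

W_t, gamma = absmean_ternary(W)
y_add_only = ternary_matvec(W_t, x)

# Reference: ordinary multiply-accumulate with the same ternary weights.
y_reference = [sum(w * xi for w, xi in zip(row, x)) for row in W_t]
assert all(abs(a - b) < 1e-9 for a, b in zip(y_add_only, y_reference))

# Rescaling by gamma gives an approximation of the full-precision product W @ x.
y_approx = [gamma * v for v in y_add_only]
```

The single scale `gamma` is applied once per output rather than once per weight, so the inner loop never multiplies. Whether the approximation is good enough is exactly what training with ternary weights from the start is meant to address.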

But Does It Work?

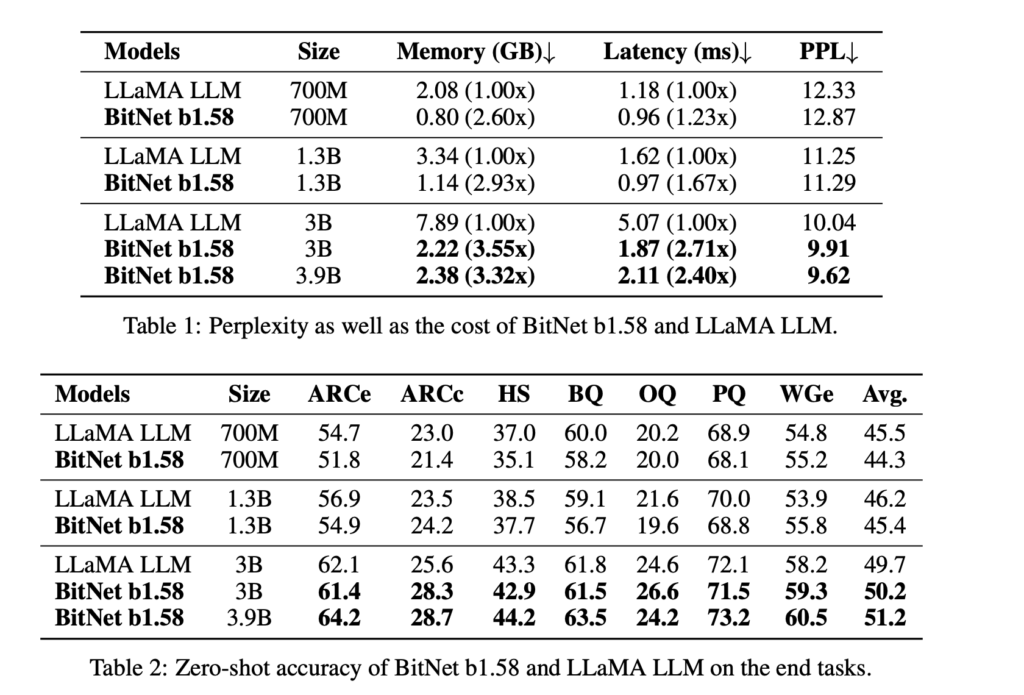

The big question is whether this efficiency comes at the cost of accuracy. Surprisingly, the paper reports that BitNet b1.58 matches a full-precision (FP16) LLaMA baseline of the same size, trained on the same number of tokens, in both perplexity and end-task performance once models reach about 3B parameters. In other words, it can be just as good at language understanding and generation while being significantly more resource-efficient.

As you can see, BitNet b1.58 performs almost identically to the full-precision baseline.

Real-World Impact

The potential impact of BitNet b1.58 and similar advancements is huge:

- Democratizing AI: More accessible LLMs could allow developers with limited resources to build powerful AI applications.

- AI on the Edge: Efficient LLMs could enable AI capabilities on devices with limited compute power, such as smartphones and IoT devices.

- Greener AI: Reducing energy consumption makes AI development more sustainable.

This could revolutionize the field, making AI more accessible, powerful, and environmentally friendly.

For more details, check the paper "The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits": 2402.17764.pdf (arxiv.org)