Introduction to MCP

The Model Context Protocol (MCP) is an open standard for connecting AI assistants (like large language models) to the systems where data and tools live.

In essence, MCP aims to bridge the gap between isolated AI models and real-world data sources – think of it as a “USB-C for AI applications”, providing a universal way to plug an AI model into various databases, file systems, APIs, and other tools.

By standardizing these connections, MCP replaces a tangle of custom integrations with a single protocol, making it simpler and more reliable to give AI systems access to the data they need. This leads to more relevant and context-aware responses from models, since they can securely fetch documents, query databases, or perform actions as needed.

Why was MCP created?

As AI assistants became more capable, developers faced a proliferation of one-off “plugins” or connectors for each data source or service. Every new integration (be it your company’s knowledge base, a cloud app, or a local tool) traditionally required custom code and unique APIs, leading to fragmented solutions that are hard to scale.

MCP addresses this by providing a universal protocol: developers can expose data or functionality through a standard interface, and AI applications can consume that interface without bespoke adapters.

The goal is a more sustainable ecosystem where AI systems maintain context across different tools seamlessly.

Anthropic open-sourced MCP in late 2024, and it quickly gained industry support: early adopters such as Block and Apollo integrated it, and developer-tool companies (Zed, Replit, Codeium, Sourcegraph, etc.) are working to enhance their platforms with MCP.

Key Components and Use Cases of MCP

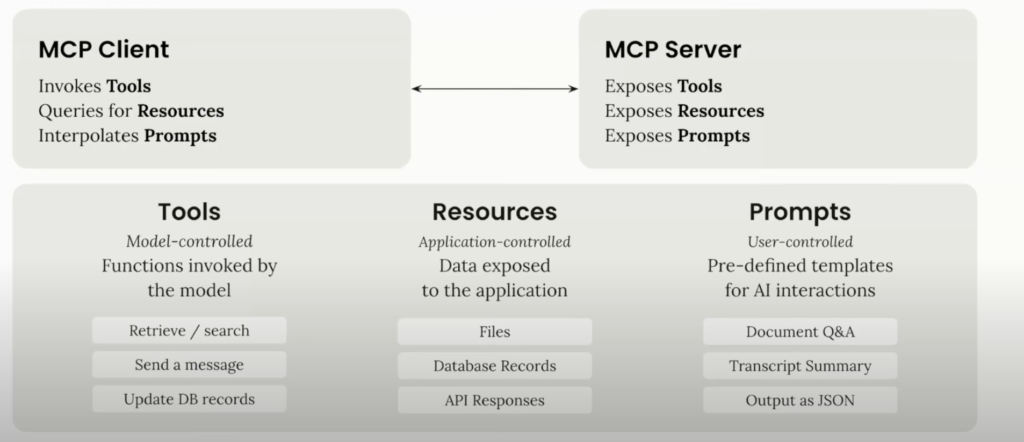

MCP follows a client–server architecture with three main components:

MCP Host: This is the AI-powered application or environment that needs access to external data. Examples of hosts include chat interfaces (like Claude’s chat app or Microsoft’s Copilot Studio), AI-enhanced IDEs (e.g. Zed editor or Sourcegraph Cody), or other AI agents. The host is where the user interacts with the AI model. It initiates connections to one or more MCP servers to extend the model’s capabilities.

MCP Client: The client is a connector library running inside the host application that manages the 1:1 connection with an MCP server.

It speaks the MCP protocol, sending requests to servers and receiving responses. In practice, the MCP client is usually provided by an SDK (for example, Anthropic’s Claude Desktop includes an MCP client, and Microsoft’s Copilot Studio uses a connector) that knows how to communicate with any MCP-compliant server.

MCP Server: The server is a lightweight program or service that exposes specific data or capabilities through the standardized MCP interface. An MCP server acts as a bridge between the AI agent and a data source or tool – it could interface with local files, a database, an external API, a code repository, etc., and present those as “resources” or “actions” to the AI. Developers either run existing MCP servers or build new ones to connect their particular data systems to AI models.

How these components interact

A host (with an AI model) can connect to multiple MCP servers at once, giving the model access to a variety of tools and context. For example, a coding assistant might connect to a GitHub server (to retrieve code or file history), a database server (to fetch query results), and a filesystem server (to read local project files).

Each server communicates with the host through its own MCP client connection, using a common message format. The host can then allow the AI model to retrieve information or invoke operations on those servers in a controlled manner (often requiring user approval before executing potentially sensitive actions).
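To illustrate the one-host, many-servers arrangement, here is a minimal sketch of a host that routes each tool call to whichever server advertised that tool. It is not SDK code: `ServerConnection`, `ToolRouter`, and the tool names are hypothetical stand-ins, and the fake servers return strings instead of talking over a transport.

```typescript
// Sketch: a host keeps one connection per server and routes each tool call to
// the server that advertised the tool. ServerConnection is a hypothetical
// stand-in for a real MCP client object.
interface ServerConnection {
  serverName: string;
  toolNames: string[];
  callTool(name: string, args: Record<string, unknown>): string;
}

class ToolRouter {
  // Maps tool name -> owning connection (no prototype, so any tool name is a safe key).
  private owners: Record<string, ServerConnection> = Object.create(null);

  register(connection: ServerConnection): void {
    for (const tool of connection.toolNames) {
      const existing = this.owners[tool];
      if (existing) {
        throw new Error(`Tool "${tool}" is already provided by ${existing.serverName}`);
      }
      this.owners[tool] = connection;
    }
  }

  call(tool: string, args: Record<string, unknown>): string {
    const owner = this.owners[tool];
    if (!owner) throw new Error(`No connected server provides "${tool}"`);
    return owner.callTool(tool, args);
  }
}

// Two fake servers standing in for a GitHub server and a filesystem server.
const github: ServerConnection = {
  serverName: "github",
  toolNames: ["list_issues"],
  callTool: (name) => `github handled ${name}`,
};
const filesystem: ServerConnection = {
  serverName: "filesystem",
  toolNames: ["read_file"],
  callTool: (name, args) => `filesystem handled ${name} for ${String(args["path"])}`,
};

const router = new ToolRouter();
router.register(github);
router.register(filesystem);
const routed = router.call("read_file", { path: "README.md" });
```

Rejecting duplicate tool names at registration is one possible design choice; a real host might instead namespace tools by server.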

Common use cases

MCP’s flexibility means it can be applied anywhere an AI needs external knowledge or the ability to act. Some scenarios enabled by MCP include:

Coding assistants and IDEs: An AI coding assistant can use MCP to access your project’s codebase, version control, and documentation. For instance, an MCP Git server can let the AI list repositories, read code files, or perform Git operations; a GitHub MCP server can interface with GitHub’s API for issues and pull requests. This is already being used in developer tools like Claude’s IDE integration, Replit, and Sourcegraph to provide context-specific code suggestions and even make code changes with user oversight.

Data analysis and databases: With an MCP database server (e.g. a PostgreSQL or SQLite connector), an AI agent could run read-only queries on a company database and retrieve results. This allows the model to answer analytical questions or generate reports based on live data, without exposing the entire database directly. The MCP server enforces access controls and schema limitations.

Document retrieval and knowledge bases: MCP servers exist for tools like Google Drive, Notion, or local filesystems, enabling an AI assistant to search documents and read their contents when answering a question.

The server might expose documents as resources (read-only content) that the AI can request. Similarly, a “Memory” MCP server can provide a long-term knowledge store or vector search (e.g. using a tool like Qdrant or Weaviate for semantic memory).

DevOps and cloud automation: Some community-built MCP servers connect to infrastructure tools – for example, a Docker MCP server to manage container tasks, or a Kubernetes server to control clusters.

An AI agent with access to these could assist in deployment or monitoring tasks (again, only with explicit user permission on each action). Official integrations by companies like Cloudflare and Stripe indicate MCP servers can also manage cloud resources or payment workflows securely.

Productivity and communication: There are MCP connectors for Slack (to read channel messages or post updates), Todoist (to manage tasks), and even calendar or maps services.

Overall, MCP fosters an ecosystem of interchangeable integrations. A tool exposed via an MCP server can be used by any AI application that supports MCP, and vice versa – an AI app can tap into a growing library of pre-built MCP servers. This means less reinventing the wheel: instead of writing a custom integration for each new AI agent or platform, a developer can build one MCP server and have it work across many AI systems.

Technical Specifications and Architecture of MCP

At its core, MCP is built on JSON-RPC 2.0 as the message format for communication.
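To make the message format concrete, the sketch below models JSON-RPC 2.0 request and response envelopes as TypeScript types and builds a tools/list request. The type and helper names (`JsonRpcRequest`, `makeRequest`, `matches`) are illustrative assumptions rather than SDK exports; only the envelope fields (`jsonrpc`, `id`, `method`, `params`, `result`, `error`) come from JSON-RPC 2.0 itself.

```typescript
// Minimal JSON-RPC 2.0 envelope types (illustrative names, not taken from an MCP SDK).
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number | string;
  method: string;
  params?: Record<string, unknown>;
}

interface JsonRpcSuccess {
  jsonrpc: "2.0";
  id: number | string;
  result: unknown;
}

interface JsonRpcFailure {
  jsonrpc: "2.0";
  id: number | string | null;
  error: { code: number; message: string; data?: unknown };
}

type JsonRpcResponse = JsonRpcSuccess | JsonRpcFailure;

let nextId = 1;

// Build a request with a fresh id so the response can be matched to it.
function makeRequest(method: string, params?: Record<string, unknown>): JsonRpcRequest {
  const request: JsonRpcRequest = { jsonrpc: "2.0", id: nextId++, method };
  if (params !== undefined) request.params = params;
  return request;
}

// A response belongs to a request when their ids are equal.
function matches(request: JsonRpcRequest, response: JsonRpcResponse): boolean {
  return response.id === request.id;
}

const listTools = makeRequest("tools/list");
const reply: JsonRpcResponse = { jsonrpc: "2.0", id: listTools.id, result: { tools: [] } };
```

Because every response echoes the `id` of its request, a client can keep several requests in flight on one connection and pair the replies as they arrive.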

Transport layer

The transport layer handles the actual communication between clients and servers. MCP supports multiple transport mechanisms:

- Stdio transport
  - Uses standard input/output for communication
  - Ideal for local processes
- HTTP with SSE transport
  - Uses Server-Sent Events for server-to-client messages
  - Uses HTTP POST for client-to-server messages

SSE transport is useful when the server can’t easily run as a child process of the host – for example, if it’s a remote web service or needs to serve multiple clients. SSE provides unidirectional streaming from server to client (so the server can stream results or real-time updates), while requests from the client come via standard HTTP posts.
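For the stdio case, a rough sketch of the framing may help. It assumes messages travel as newline-delimited JSON (one JSON-RPC message per line), which is how the MCP specification describes stdio framing as far as I know. `LineFramer` is a hypothetical class; the SDK transports handle this internally.

```typescript
// Sketch of newline-delimited JSON framing for a stdio-style byte stream.
// LineFramer is a hypothetical helper; real SDK transports do this for you.
class LineFramer {
  private buffer = "";

  // Serialize one message as a single line terminated by "\n".
  // JSON.stringify escapes newlines inside strings, so the output stays on one line.
  static encode(message: unknown): string {
    return JSON.stringify(message) + "\n";
  }

  // Feed a chunk of incoming text; returns every complete message it contains.
  push(chunk: string): unknown[] {
    this.buffer += chunk;
    const lines = this.buffer.split("\n");
    // The last element is an incomplete line (or "" if the chunk ended with "\n").
    this.buffer = lines.pop() ?? "";
    return lines
      .filter((line) => line.trim() !== "")
      .map((line) => JSON.parse(line) as unknown);
  }
}

const framer = new LineFramer();
const wire = LineFramer.encode({ jsonrpc: "2.0", id: 1, method: "ping" });

// Simulate the message arriving in two chunks, split at an arbitrary position.
const firstBatch = framer.push(wire.slice(0, 10));
const secondBatch = framer.push(wire.slice(10));
```

Here `firstBatch` is empty because no full line has arrived yet, and `secondBatch` contains the single decoded message once the terminating newline is seen.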

Capabilities – resources and tools

An MCP server can provide two broad kinds of things to an AI: contextual data (called Resources) and actions (called Tools). These are collectively referred to as the server’s capabilities in the MCP specification. During the initialization handshake, the server declares which capabilities it supports so the client knows what it can do (for example, a pure read-only data server might expose resources but no tools, or vice versa).

Resources are pieces of data or content that the server exposes for reading.

Think of resources as the knowledge or context the server makes available. This could be the contents of a file, a row from a database, the text of an email, an image file, etc. Each resource is identified by a URI (e.g. file:///path/to/file.txt or postgres://server/db/schema/table) and has some data associated with it.

Clients can request resources from the server – typically via a method like resources/read or by referencing the URI – and the server will return the content (text or binary).

Resources are meant to be determined by the client or user: for example, a user might choose which documents an AI assistant should load as context. Some MCP clients require explicit user selection of resources (to maintain control). Because resources are passive (just data), if you want the AI to automatically leverage some context without user selection, you might instead package it as a tool that the AI can actively call.
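As a rough illustration of URI-addressed resources, the sketch below keeps a tiny in-memory store and answers list and read requests. The store and function names are hypothetical, and the result shapes (`resources`, `contents`) only loosely follow what resources/list and resources/read return; treat them as an approximation rather than the exact wire format.

```typescript
// In-memory sketch of resource listing and reading (hypothetical names).
interface ResourceEntry {
  uri: string;
  name: string;
  mimeType: string;
  text: string;
}

// Keyed by URI; created without a prototype so any URI is a safe key.
const store: Record<string, ResourceEntry> = Object.create(null);

function addResource(entry: ResourceEntry): void {
  store[entry.uri] = entry;
}

// Roughly a resources/list result: metadata only, no content.
function listResources(): { resources: Array<{ uri: string; name: string; mimeType: string }> } {
  const resources = Object.keys(store).map((uri) => {
    const { name, mimeType } = store[uri];
    return { uri, name, mimeType };
  });
  return { resources };
}

// Roughly a resources/read result: the content for one URI.
function readResource(uri: string): { contents: Array<{ uri: string; mimeType: string; text: string }> } {
  const entry = store[uri];
  if (!entry) throw new Error(`Unknown resource: ${uri}`);
  return { contents: [{ uri: entry.uri, mimeType: entry.mimeType, text: entry.text }] };
}

addResource({
  uri: "file:///path/to/file.txt",
  name: "file.txt",
  mimeType: "text/plain",
  text: "hello from a resource",
});
```

Separating listing (metadata) from reading (content) lets a client show the user what is available before anything is loaded into the model’s context.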

Tools are operations or functions that the server can perform on behalf of the AI – essentially executable actions exposed via the protocol.

Tools let the AI do things in the external system: run a query, send a message, create a file, etc. Each tool has a name, a human-readable description, and an input schema defining what parameters it accepts.

The input schema is expressed in JSON Schema, allowing the host (and even the AI model) to understand what arguments are required. For example, a database query tool might have a schema with a "query" string parameter, or a calendar-scheduling tool might require a "date" and "title". Tools are discovered by the client via a standardized tools/list request – the server responds with the list of available tool names, descriptions, and input schemas.

To invoke a tool, the client sends a tools/call request with the tool name and a JSON object of arguments, then the server executes the action and returns the result (or an error).

Results can be simple confirmations, or data produced by the action (for instance, a tool that reads a file might return the file content as a result, which blurs the line with “resource” – indeed the concepts overlap; tools can return resource data, but the key is tools are initiated by the AI). Because tools can have side effects or reach sensitive data, MCP is designed for human oversight: the AI model may suggest using a tool, but typically the client (or the user in the loop) must approve it before it’s executed.
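To show how an input schema can guard a tools/call request, here is a deliberately small argument check. It is a sketch, not a JSON Schema validator: it handles only flat objects with `required` and primitive `type`, and the names `FlatSchema` and `validateArgs` are hypothetical. A real server would more likely rely on a schema library.

```typescript
// Minimal argument check against a flat JSON Schema object. Only "required"
// and primitive "type" are handled; this is not a full JSON Schema validator.
type PrimitiveType = "string" | "number" | "boolean";

interface FlatSchema {
  type: "object";
  properties: Record<string, { type: PrimitiveType }>;
  required?: string[];
}

function validateArgs(schema: FlatSchema, args: Record<string, unknown>): string[] {
  const problems: string[] = [];
  for (const key of schema.required ?? []) {
    if (!(key in args)) problems.push(`missing required argument "${key}"`);
  }
  for (const key of Object.keys(args)) {
    const spec = schema.properties[key];
    if (!spec) continue; // JSON Schema permits extra properties by default
    if (typeof args[key] !== spec.type) {
      problems.push(`argument "${key}" should be of type ${spec.type}`);
    }
  }
  return problems;
}

// Hypothetical database query tool with a single "query" string parameter.
const queryToolSchema: FlatSchema = {
  type: "object",
  properties: { query: { type: "string" } },
  required: ["query"],
};

const okProblems = validateArgs(queryToolSchema, { query: "SELECT 1" });
const typeProblems = validateArgs(queryToolSchema, { query: 42 });
const missingProblems = validateArgs(queryToolSchema, {});
```

Returning a list of problems rather than throwing on the first one makes it easier to send the model a single error result that names everything it needs to correct.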

Prompts and others: In addition to raw data and actions, MCP also defines other capabilities like Prompts (reusable prompt templates or instructions that servers can provide) and advanced flows like Sampling (which allows a server to ask the client’s AI model to generate a completion, effectively letting the server “consult” the AI). For example, a server could have a built-in prompt for summarizing a document: the host can request that prompt and fill it with data.
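The document-summary prompt mentioned above could look something like the following sketch. The `{{name}}` placeholder syntax, the `PromptTemplate` type, and `renderPrompt` are assumptions made for illustration; the returned object only loosely mirrors a prompts/get result, which is a list of role-tagged messages.

```typescript
// Sketch of a server-side prompt template (hypothetical names and placeholder syntax).
interface PromptMessage {
  role: "user" | "assistant";
  content: { type: "text"; text: string };
}

interface PromptTemplate {
  name: string;
  description: string;
  argumentNames: string[];
  template: string; // uses {{argument}} placeholders
}

function renderPrompt(prompt: PromptTemplate, args: Record<string, string>): { messages: PromptMessage[] } {
  let text = prompt.template;
  for (const argName of prompt.argumentNames) {
    const value = args[argName];
    if (value === undefined) throw new Error(`Missing prompt argument: ${argName}`);
    // split/join replaces every occurrence of the placeholder.
    text = text.split(`{{${argName}}}`).join(value);
  }
  return { messages: [{ role: "user", content: { type: "text", text } }] };
}

const summarize: PromptTemplate = {
  name: "summarize_document",
  description: "Summarize a document in a few sentences",
  argumentNames: ["document"],
  template: "Summarize the following document in three sentences:\n\n{{document}}",
};

const rendered = renderPrompt(summarize, { document: "MCP connects AI hosts to servers." });
```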

Security and scope

MCP is designed with security in mind, acknowledging that giving an AI access to external systems can be risky. The protocol encourages a few best practices to mitigate issues:

Roots (Context Boundaries): When a host connects to a server, it can specify “roots” – essentially the scope or boundaries within which the server should operate. For example, a filesystem server might be given a root of file:///home/user/project, or an API-based server might be rooted to a particular endpoint or tenant.

Roots help the server know what is “in bounds” and keep context focused. They are not an absolute security sandbox (a server could ignore them, although well-behaved servers honor them); they serve as guidance from the client about relevant resources and as an extra layer of clarity on allowed operations.
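A well-behaved filesystem server might honor roots with a check along these lines. This is a sketch under simplifying assumptions: it handles only plain file:// URIs, does not decode percent-escapes or resolve symlinks, and the function names are hypothetical.

```typescript
// Sketch: decide whether a file URI falls inside one of the roots a client supplied.
// Paths are compared segment by segment after collapsing "." and "..".
function pathSegments(fileUri: string): string[] {
  const prefix = "file://";
  if (fileUri.indexOf(prefix) !== 0) throw new Error(`Not a file URI: ${fileUri}`);
  const resolved: string[] = [];
  for (const segment of fileUri.slice(prefix.length).split("/")) {
    if (segment === "" || segment === ".") continue;
    if (segment === "..") resolved.pop(); // collapse parent references before comparing
    else resolved.push(segment);
  }
  return resolved;
}

function isWithinRoots(fileUri: string, roots: string[]): boolean {
  const target = pathSegments(fileUri);
  return roots.some((root) => {
    const base = pathSegments(root);
    // Every segment of the root must match the start of the target path.
    return base.length <= target.length && base.every((part, i) => target[i] === part);
  });
}

const projectRoots = ["file:///home/user/project"];
const inside = isWithinRoots("file:///home/user/project/src/index.ts", projectRoots);
const escaped = isWithinRoots("file:///home/user/project/../secrets.txt", projectRoots);
const sibling = isWithinRoots("file:///home/user/project-other/notes.md", projectRoots);
```

Comparing whole segments instead of string prefixes is what keeps `project-other` from being mistaken for a path inside `project`.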

Human-in-the-loop approvals: As mentioned, tool invocation usually requires user approval in practice. MCP’s role is to present the action and its parameters in a structured way (via the tool schema), so the host UI can ask the user for confirmation. Only once approved does the server actually execute the action. This ensures the AI can’t, say, delete files or send emails unless the user explicitly okays it.
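The approval step can be pictured as a small gate in the host that sits between the model’s proposed call and the server. The sketch below is synchronous and entirely hypothetical (`runWithApproval`, `ApprovalPrompt`, and `ToolExecutor` are not SDK names); a real host would show a dialog and forward approved calls through its MCP client.

```typescript
// Sketch of a host-side approval gate (all names are hypothetical).
interface ToolCall {
  name: string;
  arguments: Record<string, unknown>;
}

type ApprovalPrompt = (summary: string) => boolean;
type ToolExecutor = (call: ToolCall) => string;

function runWithApproval(call: ToolCall, askUser: ApprovalPrompt, execute: ToolExecutor): string {
  // Present the tool name and its structured arguments so the user sees exactly what will run.
  const summary = `Allow "${call.name}" with arguments ${JSON.stringify(call.arguments)}?`;
  if (!askUser(summary)) {
    return "Tool call declined by user";
  }
  return execute(call);
}

// A fake executor that records which tools actually ran.
const executed: string[] = [];
const fakeExecute: ToolExecutor = (call) => {
  executed.push(call.name);
  return `ran ${call.name}`;
};

const deleteCall: ToolCall = { name: "delete_file", arguments: { path: "notes.txt" } };
const declined = runWithApproval(deleteCall, () => false, fakeExecute);
const approved = runWithApproval(deleteCall, () => true, fakeExecute);
```

The executor is only reached on the approved path, so a declined call never touches the server.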

Access controls in servers: The MCP servers themselves often enforce their own security. For example, an MCP server for GitHub will require an access token to be provided (via environment variable or config) to authenticate to the GitHub API.

A filesystem server might run with the permissions of the local user and have an allow-list of directories it will serve. By centralizing these rules in the server implementation, organizations can tightly control what an AI agent can do.

Tools, Frameworks, and Libraries for Building MCP Servers

One of the advantages of MCP being an open standard is that there are already official SDKs and open-source libraries to help you implement it. You don’t have to write the JSON-RPC handling or protocol logic from scratch – you can use a provided framework in the language of your choice.

Available SDKs and languages: The MCP project (led by Anthropic and community contributors) provides SDKs in multiple languages, including TypeScript/JavaScript, Python, Java, Kotlin, and C#.

These SDKs offer base classes for an MCP Server and Client, utilities for defining tools/resources, and support for the standard transports. In practice, most developers use TypeScript (Node.js) or Python for MCP server development, as these were the first and most fully featured SDKs (many of the reference servers are implemented in one of these).

TypeScript/Node: Using the TypeScript SDK, you can build an MCP server as a Node.js application. The SDK integrates well with frameworks like Express if you’re using the SSE transport (for example, setting up an Express app with an /events endpoint for SSE and a POST endpoint for incoming messages).

Additional tools for development: During development of an MCP server, you might find the following useful:

- MCP Inspector: an interactive debugging UI for MCP, which can connect to your server and let you test requests and see messages in real time.

Step-by-Step: Creating an MCP Server

Initialize the MCP server instance. In your code, import the SDK and create a server object. Typically, this involves specifying the server’s name, version, and what capabilities you plan to use. For example, in TypeScript:

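    import { Server } from "@modelcontextprotocol/sdk/server/index.js";
    // StdioServerTransport is used later, when connecting the server over stdio
    import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";
    import { ListToolsRequestSchema, CallToolRequestSchema } from "@modelcontextprotocol/sdk/types.js";

    // Identify the server and declare the capabilities it will offer
    const server = new Server(
      { name: "example-server", version: "1.0.0" },
      { capabilities: { tools: {} } } // we indicate this server will provide tools
    );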

Define the server’s functionality – resources or tools. Now, use the SDK to register what your server can do. For resources, you might register handlers for resource queries or prepare a list of resource URIs. For tools, you register tool definitions and the functions to execute them. In our add-two-numbers example, we will create a tool called "calculate_sum".

    // Define the tool list handler: advertises each tool's name, description, and input schema
    server.setRequestHandler(ListToolsRequestSchema, async () => {
      return {
        tools: [{
          name: "calculate_sum",
          description: "Add two numbers together",
          inputSchema: {
            type: "object",
            properties: {
              a: { type: "number" },
              b: { type: "number" }
            },
            required: ["a", "b"]
          }
        }]
      };
    });

    // Define the tool execution handler: runs the named tool and returns its result
    server.setRequestHandler(CallToolRequestSchema, async (request) => {
      if (request.params.name === "calculate_sum") {
        // Arguments arrive as untyped JSON, so assert the shape the schema promises
        const { a, b } = request.params.arguments as { a: number; b: number };
        return {
          content: [
            { type: "text", text: String(a + b) }
          ]
        };
      }
      throw new Error("Tool not found");
    });
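Since tool arguments arrive as untyped JSON, one option is to move the addition into a small pure function that checks its inputs at runtime, which the handler can then call. The sketch below is one way to do that; `sumFromArgs` and `TextResult` are hypothetical names, and the `isError` flag is used here on the assumption that the client treats it as a tool-level failure.

```typescript
// Sketch: the addition logic pulled out of the handler so it can be validated
// and tested on its own. sumFromArgs is a hypothetical helper, not an SDK function.
interface TextResult {
  content: Array<{ type: "text"; text: string }>;
  isError?: boolean;
}

function sumFromArgs(args: Record<string, unknown> | undefined): TextResult {
  const a = args ? args["a"] : undefined;
  const b = args ? args["b"] : undefined;
  // Without this check, string inputs such as "5" and "7" would concatenate to "57".
  if (typeof a !== "number" || typeof b !== "number") {
    return {
      content: [{ type: "text", text: 'Both "a" and "b" must be numbers' }],
      isError: true,
    };
  }
  return { content: [{ type: "text", text: String(a + b) }] };
}

const sumResult = sumFromArgs({ a: 5, b: 7 });
const badResult = sumFromArgs({ a: "5", b: 7 });
```

With this helper, the calculate_sum branch of the handler could simply return `sumFromArgs(request.params.arguments)`.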

Choose and set up the transport (server runtime). Decide how your server will be run and communicate:

- For local usage (stdio): If you expect the host to launch your server as a subprocess, you don’t need much additional code. Typically, you just call something like server.connect(new StdioServerTransport()) to start listening on stdio.

- For network usage (SSE): You need to host an HTTP endpoint. In a Node/Express setup, you would create a /sse GET endpoint that, when hit by a client, creates an SSEServerTransport and calls server.connect(transport) to tie the server to that client connection. Also set up a POST endpoint (e.g. /messages) that the client will use to send JSON-RPC requests; this endpoint just passes those requests to the transport handler. The key point is that with SSE, you are effectively implementing a tiny protocol bridge: one route to establish the stream (and internally call server.connect when a client connects) and one route to accept incoming JSON messages.

Using an AI host (e.g. Claude Desktop or Copilot): Configure the host to use your server. For Claude Desktop, add your server to the mcpServers section of Claude’s config. For example, if your server is a standalone executable my-server accessible in PATH, you might add:

    "mcpServers": {
      "example": { "command": "my-server" }
    }

You could then ask the AI, “Use the calculate_sum tool to add 5 and 7,” and if all goes well, it will invoke the tool and return the answer. (In practice, with such a simple tool, the AI might just do it in its head, but for testing you can force it by phrasing the request to ensure tool use.)

Deploy or integrate the server as needed

Once you are satisfied with your MCP server, consider how it will run in your target scenario. If it’s for personal use (e.g. connecting a local IDE to your stuff), you might just run it on demand. If it’s for a production agent or a team, you might deploy the server as a service (maybe a Docker container running your MCP server code on some internal host or cloud function). Because MCP is a standard, multiple hosts could use your server if they know its address or launch command. For example, a company could deploy a database MCP server on an internal network; both a Claude instance and a Copilot instance could connect to it to allow their AI agents to safely query the database. Always ensure any required credentials (API keys, etc.) are securely provided to the server (via environment variables or a vault) and that you’ve restricted its access (using the roots mechanism or internal checks) to only the intended scope.
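For the credentials advice above, a server might load its settings at startup and refuse to run if something is missing. The sketch below assumes two made-up variable names, `EXAMPLE_API_KEY` and `EXAMPLE_ALLOWED_ROOT`, and a made-up default root; it takes the environment as a parameter so it can be exercised without touching the real process environment.

```typescript
// Sketch: read required settings from an environment-like object and fail fast
// if a credential is missing. All variable names and defaults are hypothetical.
interface ServerConfig {
  apiKey: string;
  allowedRoot: string;
}

function loadConfig(env: Record<string, string | undefined>): ServerConfig {
  const apiKey = env["EXAMPLE_API_KEY"];
  if (!apiKey) {
    // Name the missing variable, but never echo secret values in error messages.
    throw new Error("EXAMPLE_API_KEY is not set");
  }
  // Restrict the server to one location unless the deployment overrides it.
  const allowedRoot = env["EXAMPLE_ALLOWED_ROOT"] ?? "file:///srv/example-data";
  return { apiKey, allowedRoot };
}

// In a Node.js server this would typically be called as loadConfig(process.env).
const config = loadConfig({ EXAMPLE_API_KEY: "test-key" });
```

Failing at startup, rather than on the first tool call, surfaces a misconfigured deployment before any host connects.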

Conclusion and Resources

The Model Context Protocol is a powerful development: by standardizing how AI models interact with external data and tools, it enables a plug-and-play approach to augmenting AI capabilities. We’ve covered what MCP is, how it works, and how to build an MCP server. From required libraries (e.g. the official SDKs in TypeScript/Python and others) to the architecture of client–server communication (JSON-RPC over stdio or SSE), MCP provides a clear framework for integration. Building an MCP server involves defining what data or actions you want to expose, implementing a few handler functions, and letting the protocol layer handle the rest. With the growing library of open-source MCP servers for everything from databases to DevOps, you can also study and reuse existing implementations.

Resources:

https://modelcontextprotocol.io/introduction